Hi! Welcome. You being here means more than you know. Knowing it lands with someone like you keeps me going. I'm Lavena Xu-Johnson. I write about psychology for founders. Why? Because scaling a business means scaling ourselves first.

Happy Tuesday, founders,

And if you are reading this from the US, a very warm Happy Thanksgiving to you and your loved ones!

Holidays can magnify everything that is already there. Gratitude, and also the quiet exhaustion, the anxiety that flares at 3 a.m., the sense that your mind is carrying more than it knows how to name.

At the same time, we live in a time when your “therapist” might be a chatbot on your phone, and when investors are putting serious money behind AI tools that promise to listen, coach, and even diagnose.

Today I want to step back from the hype and the fear of AI, and look clearly at one question:

What is the actual landscape of AI in mental health right now, and how valid and viable is it as real support?

The crisis AI is walking into

The starting point is not the tech itself; it is the scale of the pain.

According to the World Health Organization, in 2021, around 1 in every 7 people worldwide, roughly 1.1 billion, were living with a mental disorder, with anxiety and depression the most common.

The COVID years poured more fuel on it. Global estimates suggest that in 2020 there was about a 26 percent increase in anxiety disorders and a 28 percent increase in major depressive disorder, driven by uncertainty, isolation, and economic stress.

More than a billion people now live with mental health problems, yet in many countries, less than 2 percent of health budgets go to mental health services, and there are often fewer than one mental health professional per 100,000 people.

Where AI is already showing up in mental health

If we strip away the buzzwords, most AI in mental health falls into a few buckets: screening and diagnosis, self-guided support, and ongoing monitoring.

A DelveInsight analysis of AI in mental health reports that in 2015 only about 10 percent of mental health professionals used AI tools, often simple chatbots for stress relief. By 2024, that figure had risen to over 60 percent. Today's tools can analyse speech patterns, writing, and behaviour, and in some studies they classify depression or anxiety markers with reported accuracies of up to around 90 percent in controlled settings.

1. Screening and early detection

AI systems now analyse:

Speech and voice: Startups like Ellipsis Health and Kintsugi look for vocal “biomarkers” of depression or anxiety during ordinary speech.

Text and chat logs: Tools scan language patterns in messages or journaling for risk signals, including suicidal ideation.

Clinical and brain data: Research groups and companies use machine learning on MRI scans and health records to predict risk for disorders such as schizophrenia, bipolar disorder, or dementia.

The value of AI here is flagging risk before a clinical diagnosis. Instead of someone reaching a breaking point before getting noticed, AI might send signals that “something is off” to a clinician or directly to the individual.

2. Chatbots and “virtual therapists”

Apps like Woebot, Wysa, Youper, and many others offer CBT-style conversations, mood tracking, and coping strategies.

These systems:

Provide structured CBT exercises

Offer 24/7 availability

Help users practice thought reframing, grounding, or breathing techniques

For founders who are used to managing everything alone, having a low-friction space to “talk things out” can feel relieving, especially when the alternative is spending months on a therapy waitlist.

3. Wearables and continuous monitoring

AI is also moving into rings, watches, and phones. Devices track sleep, heart rate variability, movement, and sometimes voice, and then use AI models to estimate stress, mood, or relapse risk. Some systems are being trialled to alert clinicians if a patient with bipolar disorder or severe depression shows early signs of crisis.

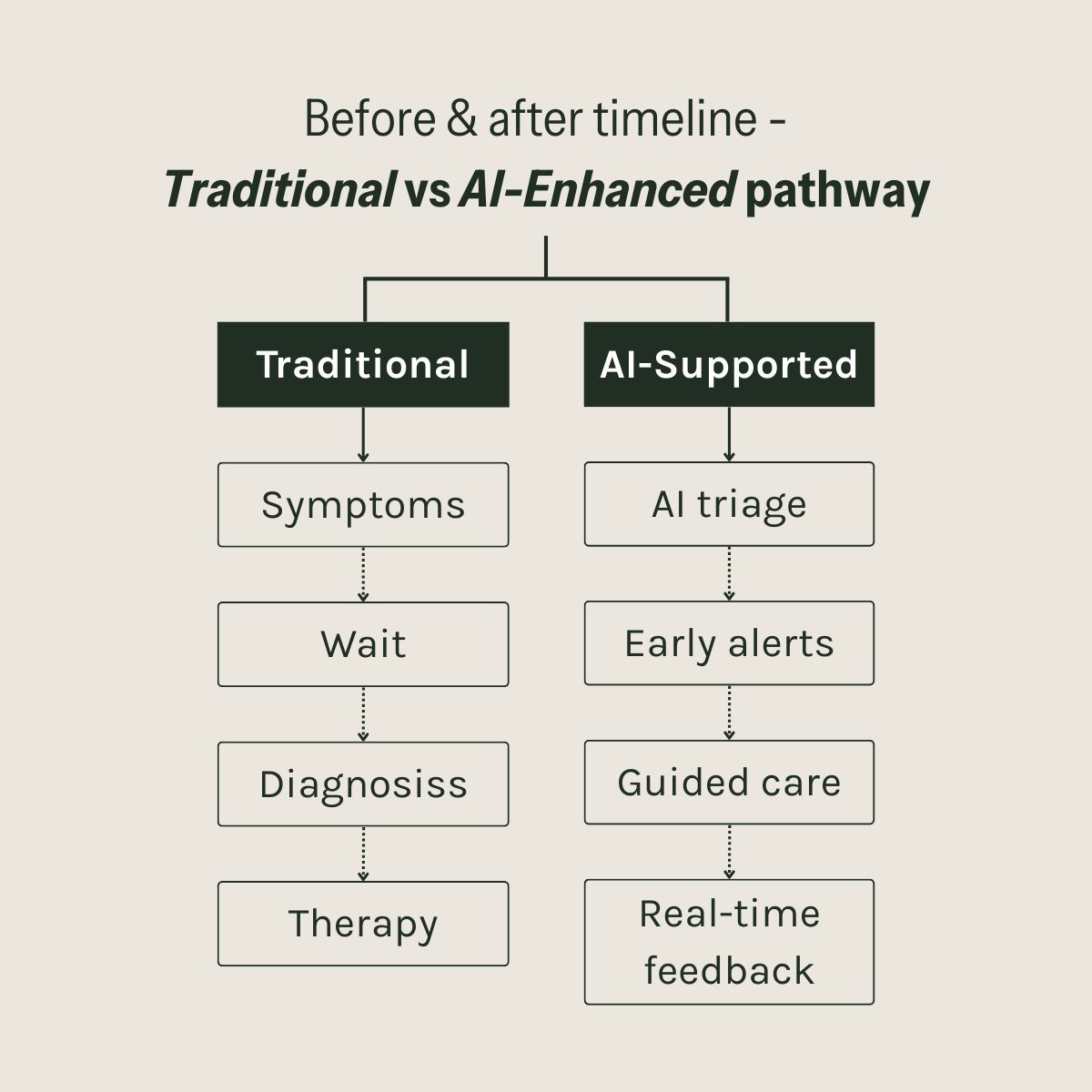

In short, AI is inserting itself at three points in the mental health journey:

Catching risk earlier

Offering scalable self-guided support

Watching for deterioration between human appointments

So, does any of this actually work?

A large systematic review published in 2023 examined AI-based conversational agents for mental health. Across 15 randomized controlled trials, chatbots produced moderate reductions in depression and psychological distress compared with control conditions, with effect sizes roughly in the 0.6 to 0.7 range. This is in the same ballpark as some established digital therapies.

A more recent meta-analysis on AI chatbots for depression and anxiety found benefits as well, but flagged familiar problems: small sample sizes, short follow-up periods, high dropout rates, and a bias toward relatively mild cases.

On the diagnostic side, machine learning models using speech, text, or multimodal inputs can achieve high accuracy on research datasets, but performance drops when tested in real-world, diverse populations.

A 2025 overview in Nature argued that while AI tools show genuine promise in improving diagnosis and access, many are not yet clinically validated in the conditions and populations where they are being marketed, and there is a real risk that benefits are unevenly distributed toward already advantaged groups.

So the evidence right now sounds something like this:

AI chatbots can help reduce symptoms for some people, especially for mild to moderate distress, in the short term.

Early detection models can detect patterns that humans miss, but they are fragile when applied outside the controlled environments in which they were trained.

Very little data exists on long-term outcomes, complex trauma, suicidality, or severe psychiatric illness.

In other words, there is a signal here. It is just not yet the whole story some founders or investors want it to be.

The risks when we outsource our inner life

Regulators have started to recognise that “AI therapists” are not just productivity tools. The US FDA’s Digital Health Advisory Committee recently held dedicated meetings on generative AI-enabled digital mental health devices, focusing on the safety of chatbots that claim to diagnose or treat psychiatric conditions directly with patients.

Professional bodies such as the American Psychological Association and American Psychiatric Association have urged the FDA to tighten oversight, raise evidence standards, and clarify when these tools cross the line into medical devices that must be regulated.

Clinicians are also sensing the potential risk. A recent report highlighted therapists’ concerns that vulnerable people might become emotionally dependent on unsupervised chatbots, receive misleading advice, or have their symptoms amplified instead of contained. Some tragic cases have already driven policy changes in how AI systems respond to users in crisis.

The main risk is not that AI suddenly becomes malicious. It is that:

We overestimate its capacity for nuance and care and treat complex trauma or suicidality as if it were a scheduling problem.

Bias and blind spots get encoded into systems, leading to underdiagnosis or misdiagnosis for certain cultures, languages, or genders.

Data privacy fails in tools that handle deeply sensitive information without robust protections.

If you are already prone to self-reliance and perfectionism, it is tempting to relate to AI as “my therapist who never needs anything from me”, which can quietly reinforce the belief that your inner world should be handled solo, with maximum efficiency and minimum vulnerability.

How to use ‘AI therapists’ safely

1. Be selective and boundary conscious

Before using an AI mental health tool, ask:

Has this product been tested in peer-reviewed studies, or is it mostly marketing language?

Who owns my data, where is it stored, and can I delete it?

Does this app clearly state what it can and cannot do?

Notice your own attachment to it as well. If you feel panicked when you do not check in, or you find yourself hiding your use of the app from people who care about you, treat that as a signal to pause and reconsider how you are using it.

2. Pair AI with real relationships

If you are in therapy, ask your therapist whether they are open to integrating data from an app or chatbot into your work together. Some clinicians welcome mood data or journaling that can reveal what happens between sessions.

If you are not in therapy, consider AI as a bridge, not a replacement. It can be the practice ground that makes it easier to eventually sit in a room with another human and say the thing you have never said out loud.

Closing

AI can help with pattern recognition, probability, and prediction. But our nervous system is older than our AI. We heal in relationship, in connection, in safety, in time.

On a week like Thanksgiving, when gratitude posts flood our socials, you do not need to feel grateful for everything. You also do not need to feel ashamed if you are using whatever tools you can to get through. Just keep a note of where you hope a machine will rescue you, and where you might actually be ready to let another human in.

I feel thankful for the teams building better diagnostics and for the therapists who hold our story. We can be interested in AI as a way to close access gaps and still fiercely defend the space where healing is deeply, stubbornly human.

Happy Thanksgiving if you are celebrating. And wherever you are reading from, I am glad you are still here, still thinking about your mind, still building.

Until next week,

Lavena
